Intel Xe Graphics Preview v2.0

Intel is developing discrete GPUs for gamers, professionals, and servers, and they're all slated for release this year or coming in 2021. Intel's cards will either be the long-awaited saviors of a stagnant marketplace, or they'll underperform and flop miserably (no pressure, Intel PR person reading this). Personally, I'm happy either way: we get good GPUs, or we get some good stuff to make fun of.

This is our second round of investigation into Xe, as quite a bit has transpired in the last few months. To quickly recap, here's a timeline of the major announcements Intel has made since the moment they went public with Xe's development:

- November 8, 2017: Raja Koduri quits his job running AMD's GPU department and joins Intel, becoming their Senior VP of core and visual computing. His first act is to hire half a dozen old buddies from within AMD's ranks.

- June 12, 2018: Then-CEO Brian Krzanich reveals to Intel's investors that they've been designing an Arctic Sound discrete GPU architecture for years, and they plan on releasing it in 2020.

- January 8, 2019: Senior VP of client computing Gregory Bryant confirms at CES that Intel's first round of GPUs will arrive on the 10nm node.

- May 1, 2019: Jim Jeffers, senior principal engineer and director of the rendering and visualization team, announces Xe's ray tracing capabilities at FMX19.

- Nov 17, 2019: Raja Koduri reveals Xe will come in three flavors: high-performance, low-power, and high-performance compute. He says the first GPU in the latter category will be Ponte Vecchio, coming in 2021 on the 7nm node.

- January 9, 2020: The first images of the Discrete Graphics One Software Development Vehicle (DG1 SDV) are published, showing a small RGB-infused card helping developers optimize their software for the Xe architecture.

And soon…

- March 17, 2020: Senior developer relations engineer Antoine Cohade will "provide a detailed tour of the hardware architecture" and the "performance implications" of Xe at GDC.

The official narrative spins a tale of Intel hard at work building mysterious GPUs infused with many desirable features: better nodes, ray tracing, new packaging techniques. But you and I both know it's not the gimmicks that make a GPU, but the horsepower and cash involved. That's what this article is about.

Architecture

A good architecture starts with one brick, and so do GPUs… except for Intel's. AMD and Nvidia's cores perform one operation per clock, but Intel's execution units (EUs) perform eight. Despite the technical inaccuracies, however, we're going to describe one EU as being equivalent to eight cores for comparison purposes.

Apart from Intel's need to build with eight bricks at a time, their construction techniques are straightforward. They can throw a few bricks together and make a wall. A few walls and you get a room, chuck a couple of those together and you can make an apartment.

Skipping the intermediary steps, Xe's largest self-contained unit (the apartment) is called a slice and each one contains 512 or 768 cores, for high-performance and low-power slices, respectively. One apartment is all you need, so the low-power cards use just one slice. But if you don't want to settle there, Intel is building skyscraper-style enthusiast GPUs made of many slices.

That's all you need to know about the Xe architecture to grasp what's going on, but if you can speak some technobabble and like numbers, don't skip this next bit.

In Gen11, Intel's integrated GPUs had one slice made of eight sub-slices, which in turn had eight execution units each. They've rejigged this slightly for Gen12 (Xe's first generation) and are including compute units (CUs) along with changes to the render backend.

In September, code accidentally uploaded to GitHub leaked the configurations of DG1, Ponte Vecchio, and one DG2 variant. This leak is reliable, as its counter-intuitive prediction that Ponte Vecchio will have two slices was proven correct. Its prediction that DG1 will have six sub-slices per slice and thus 96 EUs was also more or less confirmed by an EEC filing that gives the same number.

The leak reveals that in all their Gen12 models, Intel has 16 EUs per sub-slice, and in Ponte Vecchio specifically, four sub-slices per slice. Koduri later revealed that Ponte Vecchio has two slices and 16 CUs.

That's enough information to say that Ponte Vecchio probably works like this: eight EUs are combined into a CU (64 cores), which are paired into a sub-slice (128 cores/16 EUs), four of which make one slice (512 cores/64 EUs). With two slices, that means Ponte Vecchio has 128 EUs, or 1024 cores. Note that the two-slice configuration may be just for prototypes.
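The roll-up described above is easy to check with a few multiplications. The sketch below is only an illustration of the article's reading of the leak; the constant and function names are made up for this example and do not come from Intel.

```python
# Hierarchy figures taken from the article's reading of the leak.
CORES_PER_EU = 8          # the article's "one EU = eight cores" convention
EUS_PER_CU = 8
CUS_PER_SUBSLICE = 2
SUBSLICES_PER_SLICE = 4


def ponte_vecchio_totals(slices: int = 2) -> dict:
    """Roll the per-level counts up into totals (hypothetical helper)."""
    sub_slices = slices * SUBSLICES_PER_SLICE
    cus = sub_slices * CUS_PER_SUBSLICE
    eus = cus * EUS_PER_CU
    cores = eus * CORES_PER_EU
    return {"sub_slices": sub_slices, "cus": cus, "eus": eus, "cores": cores}


if __name__ == "__main__":
    # Two slices is the configuration Koduri described.
    print(ponte_vecchio_totals(2))
```

With two slices this gives 16 CUs, 128 EUs and 1024 cores, which lines up with both the leak and Koduri's figures.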

Ponte Vecchio's basic slice configuration is expected to be used across high-performance and low-power models as well.

DG2: High-Performance

The high-performance microarchitecture, codenamed Discrete Graphics Two (DG2), envelops the mid-range and enthusiast GPU markets. It's these cards that'll have the ray tracing and RGB bling, but what's most exciting is the potential for Intel to challenge Nvidia's stranglehold on the premium $600+ range.

"Xe HP … would easily be the largest silicon designed in India and among the largest anywhere." - Raja Koduri

Last July, Intel accidentally published a driver (thanks!) that contained three DG2 codenames: iDG2HP128, iDG2HP256, and iDG2HP512. Making the reasonable assumption that the three digits at the end indicate the card's number of EUs, they'll have 1024, 2048 and 4096 cores, respectively. That's two, four and eight slices.

Not long afterwards, however, we saw solid evidence of a three-slice GPU with 1536 cores being developed as well. Given it would make little sense for Intel to develop a fourth card spec'd so similarly to existing models, it's safe to assume this is an iDG2HP256 with one slice disabled. This supports widespread suspicions that Intel is taking the three fundamental models and disabling one or more slices to add fourth, fifth, sixth or even seventh models to their line-up.

| # of Slices | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Core Count | 768* | 1024 | 1536 | 2048 | 2560 | 3072 | 3584 | 4096 |
| Codename | iDG1LPDEV | iDG2HP128 | | iDG2HP256 | | | | iDG2HP512 |
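The table above follows directly from 512 cores per high-performance slice, and the "disabled slice" theory amounts to mapping each slice count onto the smallest leaked die that could hold it. Here is a rough sketch of both ideas. The helper names are hypothetical, and the mapping for 3, 5, 6 and 7 slices is speculation in the same spirit as the article, not something Intel has confirmed.

```python
HP_CORES_PER_SLICE = 512
CORES_PER_EU = 8

# Full dies from the leaked driver, keyed by slice count. The trailing digits
# of each codename are assumed (as in the article) to be the EU count.
FULL_DIES = {2: "iDG2HP128", 4: "iDG2HP256", 8: "iDG2HP512"}


def hp_core_count(slices: int) -> int:
    """Cores in a high-performance part with the given number of slices."""
    return slices * HP_CORES_PER_SLICE


def likely_parent_die(slices: int) -> str:
    """Smallest leaked die with at least `slices` slices (speculative)."""
    for full_slices in sorted(FULL_DIES):
        if 2 <= slices <= full_slices:
            return FULL_DIES[full_slices]
    raise ValueError("no leaked DG2 die covers that slice count")


if __name__ == "__main__":
    for n in range(2, 9):
        print(n, hp_core_count(n), likely_parent_die(n))
```

Under these assumptions a three-slice, 1536-core part maps to an iDG2HP256 with one slice turned off, which is the case the article describes.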

DG2 will also be more than just gaming GPUs. They won't be able to handle scientific workloads like Ponte Vecchio, but if they're good value on release, they could certainly be remarketed with professional drivers as video editing or 3D modeling hardware, like Nvidia's Quadro cards.

DG1: Low-Power

The low-power segment is defined as just that, 5W through to 50W: 5W to 20W for integrated GPUs, and 20W to 50W for discrete ones.

Intel has already introduced us to the first member of the LP family. The DG1 SDV was prominently displayed at CES 2020, running Destiny 2 and Warframe with RGB and all. But it's just dressing up as a gaming card. The DG1 SDV is a developer-only edition designed to help out with transitioning software and drivers to the Xe platform.

Nevertheless, that doesn't mean you won't eventually be able to buy something fairly similar – Intel has already shown it running in a laptop.

Integrated forms of the LP GPU are reported to have between 64 and 768 cores, while discrete LP GPUs exclusively wield the full 768 cores. That's a comparable number of cores to AMD's best integrated hardware, and Nvidia's lowest-end discrete GPUs. But where Xe LP might outshine them is in clock speeds.

A leaked Geekbench run of a Rocket Lake mobile processor has shown an integrated 768 core LP GPU running at 1.5 GHz, netting it 2.3 TFLOPs. That's the same amount of performance as a GTX 1650. Even assuming the worst, that the 1.5 GHz uses the full 20W TDP and Intel won't be able to push speeds even 1 MHz higher before release, that's impressive.

Just imagine how efficient this processor must be. The GTX 1650 has slightly fewer TFLOPs and has a 75W TDP: almost four times as much. An LP GPU pushed to 50W will boost clock speeds higher and could enter the same performance bracket as a GTX 1660.
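For anyone who wants to check the 2.3 TFLOPs figure, here is a small sketch of the usual peak-throughput estimate. It assumes each core retires one fused multiply-add per clock and counts that as two floating-point operations; that is the common convention for headline TFLOPs numbers, but it is an assumption here rather than something the leak states. The function names are made up for the example.

```python
def peak_tflops(cores: int, clock_ghz: float, flops_per_core_per_clock: int = 2) -> float:
    """Theoretical peak FP32 throughput in TFLOPs.

    flops_per_core_per_clock=2 assumes one fused multiply-add per core per
    clock, counted as two operations (an assumption, not from the leak).
    """
    gflops = cores * flops_per_core_per_clock * clock_ghz
    return gflops / 1000.0


def tdp_ratio(tdp_a_watts: float, tdp_b_watts: float) -> float:
    """How many times larger TDP A is than TDP B."""
    return tdp_a_watts / tdp_b_watts


if __name__ == "__main__":
    xe_lp = peak_tflops(cores=768, clock_ghz=1.5)
    print(f"Xe LP peak: {xe_lp:.3f} TFLOPs")
    print(f"Xe LP at 20W: {xe_lp / 20:.4f} TFLOPs per watt")
    print(f"GTX 1650 TDP vs Xe LP TDP: {tdp_ratio(75, 20):.2f}x")
```

The 768-core, 1.5 GHz case works out to roughly 2.3 TFLOPs, and 75W against 20W is a 3.75x gap, which is where "almost four times" comes from.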

But the good stuff doesn't stop there. Updates to the Linux kernel show Intel is planning a way to run integrated and discrete graphics concurrently and potentially in conjunction. If this pans out, the full power of an iGPU could be paired with the discrete GPU's power to create a 1536 core combo GPU that is space-efficient and cost-effective. It's an excellent way to squeeze more performance out of the same silicon.

Ponte Vecchio: Data Compute

When I said in the introduction that only the raw horsepower of a GPU mattered, I lied (intro clickbait confirmed). That's not the case for any data center GPU, and Ponte Vecchio in particular. Ponte Vecchio is all about the tricks and techniques that maximize efficiency.

Fun Fact:

Koduri named Ponte Vecchio after the bridge in Florence because he likes the gelato there.

Ponte Vecchio was created specifically with the Aurora supercomputer in mind, which should give you an indication of the type of workloads it will be optimized for.

If it didn't give you an indication, then I'll spell it out: double precision. It's basically the first thing on the list for every data center GPU, and Koduri spent a lot of his time discussing it during the reveal. Unfortunately, however, the only number he would put to it is Ponte Vecchio's per-EU theoretical FP64 performance, which is ~40x that of Gen11's.

Doing some back-of-a-napkin math, that's about 20 TFLOPs at FP64 per 1024 core card. Don't take that as gospel though, because there aren't enough significant figures in the calculation to yield meaningful results.
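To see what that napkin math implies, the sketch below works backwards from the two numbers quoted here: roughly 20 TFLOPs per card and a ~40x per-EU uplift over Gen11. The 128-EU count comes from the 1024-core figure divided by eight cores per EU. The Gen11 value it produces is only what these rounded inputs imply, not a published or measured figure, and the function names are invented for the example.

```python
def fp64_per_eu_gflops(card_tflops: float = 20.0, eus: int = 128) -> float:
    """Per-EU FP64 throughput implied by a whole-card estimate."""
    return card_tflops * 1000.0 / eus


def implied_gen11_fp64_per_eu_gflops(
    card_tflops: float = 20.0, eus: int = 128, speedup: float = 40.0
) -> float:
    """Gen11 per-EU FP64 rate implied by the ~40x claim (rough, back-solved)."""
    return fp64_per_eu_gflops(card_tflops, eus) / speedup


if __name__ == "__main__":
    print(f"Ponte Vecchio, per EU: {fp64_per_eu_gflops():.2f} GFLOPs")
    print(f"Implied Gen11, per EU: {implied_gen11_fp64_per_eu_gflops():.2f} GFLOPs")
```

Because both inputs carry only one or two significant figures, the outputs should be read as order-of-magnitude estimates at best.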

Second to high precision workloads is, naturally, ultra-low precision work. Ponte Vecchio supports INT8, BF16, and the usual FP8 and FP16 for AI neural network processing. Each EU is outfitted with a matrix engine (like an Nvidia Tensor core) that is 32x faster than a standard EU for matrix processing.

However, none of that is particularly novel. Ponte Vecchio's true strength is in its memory subsystem, which lets the GPU tackle problems in new ways.

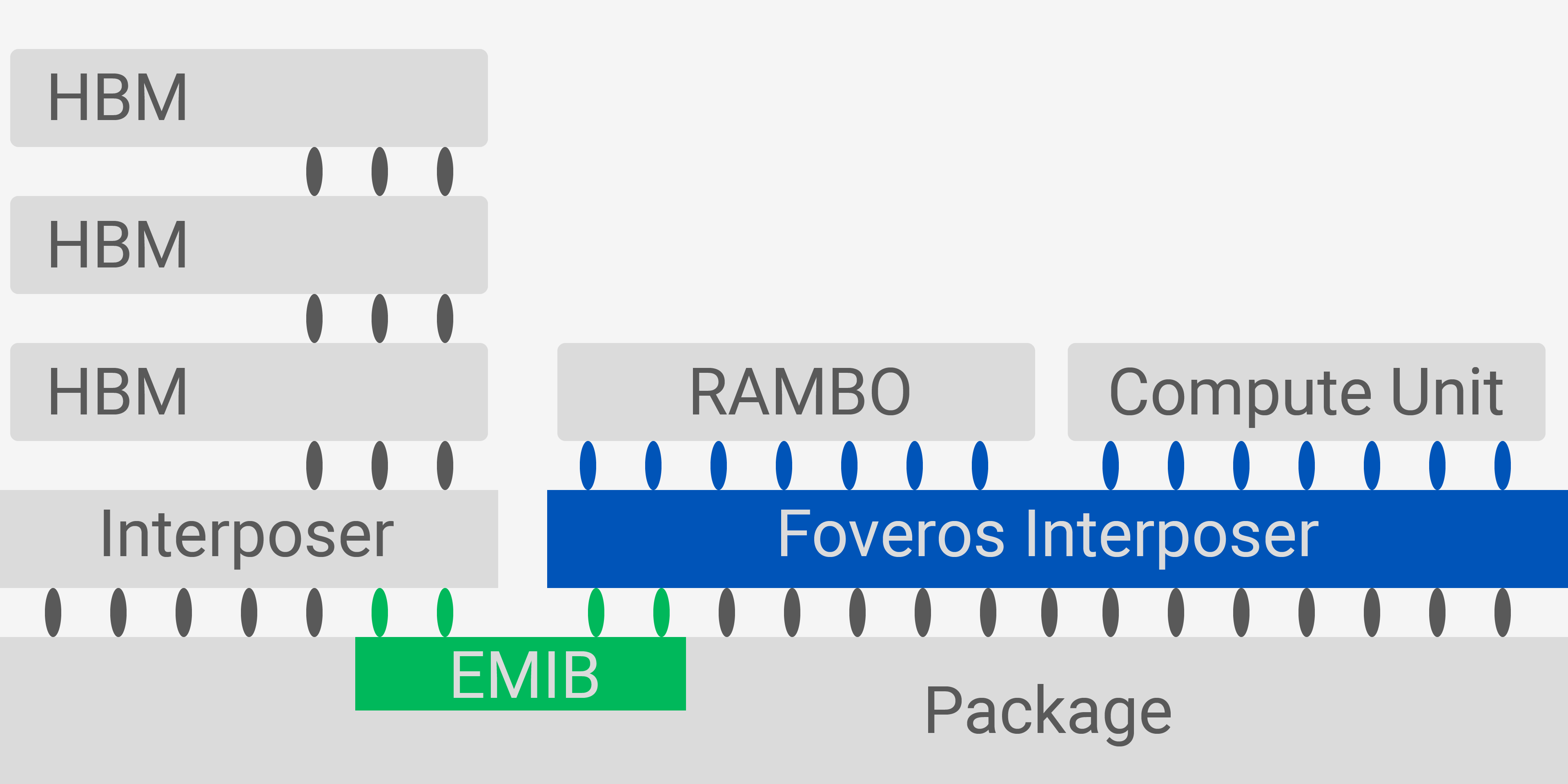

To do so, Ponte Vecchio leverages Intel's pivotal new interconnect technologies, Foveros and EMIB (embedded multi-die interconnect bridge). Foveros uses through-silicon vias to stack multiple chips on top of an active interposer die, giving them on-chip-like speeds but off-chip connectivity. In comparison, EMIB is a 'dumb' connection between two chips that uses an inactive die but offers high bandwidth at a lower cost.

EMIB and Foveros

EMIB is used to connect the GPU's compute hardware directly to the HBM, netting Ponte Vecchio spectacular memory bandwidth. Foveros is used to connect the two CUs on a sub-slice to one chiplet of RAMBO cache, Intel's new super cache. Thanks to Foveros, RAMBO doesn't have any limitations imposed upon its capacity or footprint, and it can bypass the CUs when sending/receiving data from the HBM or other sub-slices.

Having a gigantic cache – and by gigantic I mean gigantic, Intel's diagrams show a RAMBO chiplet as being the same size as a CU – is obviously really expensive, but it unlocks some nifty options. In neural network processing, for example, RAMBO can store matrices an order of magnitude larger than other GPU caches. Other GPUs lose performance as matrices get larger and the level of precision increases, but Ponte Vecchio is able to sustain peak performance.

Ponte Vecchio

The RAMBO cache also powers the Xe Memory Fabric, a spiderweb of connections and technologies that pools resources from every GPU and CPU in a server node. Every GPU's RAMBO cache is combined into one bank available to everything, with the slowest connection being the CPUs' at 63 GB/s over PCIe 5.0.
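The 63 GB/s figure matches what a full-width PCIe 5.0 link delivers in one direction. The sketch below reproduces it, assuming an x16 link, 32 GT/s per lane and 128b/130b line encoding; the article doesn't spell out the link width, so treat x16 as an assumption. The function name is made up for the example.

```python
def pcie_bandwidth_gb_per_s(
    gt_per_s_per_lane: float = 32.0,     # PCIe 5.0 signalling rate per lane
    lanes: int = 16,                     # assumed x16 link
    encoding_efficiency: float = 128 / 130,  # 128b/130b line encoding
) -> float:
    """Usable one-direction bandwidth in GB/s, ignoring protocol overhead."""
    gigabits_per_s = gt_per_s_per_lane * lanes * encoding_efficiency
    return gigabits_per_s / 8.0  # bits to bytes


if __name__ == "__main__":
    print(f"PCIe 5.0 x16: {pcie_bandwidth_gb_per_s():.1f} GB/s per direction")
```

Packet headers and flow control trim real-world throughput a little further, so this is best treated as an upper bound.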

At their recent yearly earnings investor meeting, Intel confirmed that Ponte Vecchio will begin shipping during the fourth quarter of 2021. It's unclear if that refers to a full release or an exclusive early launch for the Aurora supercomputer.

Software

Hardware is good and all, but completely useless without adequate software support. And the threshold is pretty high: if even 1% of games aren't properly supported, millions of gamers are alienated. The good news is Intel seems to be doing their best.

Intel is redesigning its lowest level of software, the instruction set architecture (ISA), for modern high-performance applications. "Gen12 is planned to include one of the most in-depth reworks of the Intel EU ISA since the original i965. The encoding of almost every instruction field, hardware opcode and register type needs to be updated."

At the driver level, Intel has a long way to go but is making progress. Their integrated GPU drivers aren't updated as frequently as their competitors', with the mean time between the last ten updates being 26 days for Intel versus 14 days for Nvidia and 12 days for AMD. But their stability and support did improve a lot during 2019, and 275 new titles were optimized for Intel's architecture.

Intel's consumer-facing software, on the other hand, is superb. Their recently released Graphics Command Center provides significantly more control than Nvidia's GeForce Experience, for example, and is easier to use. Like GeForce Experience, it can optimize games for particular hardware configurations, but it also explains what each setting does and how much of a performance impact it will have. Driver control is pleasantly straightforward.

The Command Center is unique in providing advanced display controls as well. It offers painless multi-display setup and refresh rate and rotation syncing, along with thorough options to adjust color styling. I personally use it to control my system, despite running Nvidia hardware.

As a bonus, Intel also supports variable refresh rate, so Xe products will support FreeSync and G-Sync monitors.

Release

While Intel is being a bit coy about what they'll announce at GDC in March, there's a good chance we're looking at a full reveal. If that's the case, then we can expect a release in the subsequent months. The most likely candidate is June.

Last October, Koduri tweeted a not-so-subtle hint in the form of an image of his new numberplate. It reads "Think Xe" and has a June 2020 date. He is refusing to comment on whether the date has any significance or not, which suggests it probably does.

One advantage of leaking a date in this way is that it tells the community what to expect, without building so much excitement that fans will get angry if the GPUs arrive in July instead. So consider it a blurry target; Intel is probably aiming for a June release (in time for Computex), but it might take a little longer depending on how things are going.

Intel is hinting at some pretty cool stuff and we remain hopeful about having a third major player in the graphics arena. But until it's time, we can't be anything more than cautiously optimistic.

Source: https://www.techspot.com/article/1975-intel-xe-preview-v2/

Posted by: batesliented1948.blogspot.com
